Kris T. Huang, MD, PhD, CTO

The 1990s brought progress from chamfer matching to voxel intensity-based methods, ushering in the idea that intensity relationships between images could be non-linear and hinting at the concept of a more general dependence between images.

Joint histograms

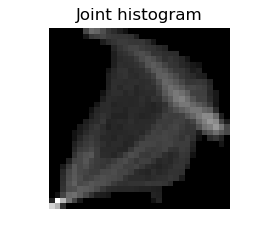

Image registration is typically performed with images from the same patient. So although it seems obvious, or at least intuitive, that those images should have something in common even when they come from different modalities, expressing that relationship mathematically was neither obvious nor straightforward. One of the first clues surfaced from looking at the 2D histogram, which shows how the gray levels of one image relate to the gray levels of the other [1]:

Example 2D histograms showing signal dispersion, or diffusion, when the images are intentionally misregistered by a known amount. Figure adapted from the Handbook of Medical Image Processing and Analysis (Second Edition), 2009.
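The dispersion that comes with misregistration is easy to reproduce numerically. The minimal NumPy sketch below builds a joint histogram for an aligned pair and for the same pair with one image shifted by a few pixels. The synthetic images are assumptions for illustration only: image A is a smooth sinusoidal pattern, and image B is a non-linear remapping of A standing in for a second modality. The count of occupied bins is a hypothetical, crude proxy for "tightness", not the measure used in the literature.

```python
import numpy as np


def synthetic_pair(n=128):
    """Hypothetical test images: B is a non-linear function of A, as if from another modality."""
    y, x = np.mgrid[0:n, 0:n] / n
    a = np.sin(2 * np.pi * 3 * x) * np.cos(2 * np.pi * 2 * y)
    b = 1.0 - a**2  # non-monotonic intensity mapping
    return a, b


def joint_histogram(a, b, bins=32):
    """2D histogram of co-located intensities: rows index A's bins, columns index B's bins."""
    hist, _, _ = np.histogram2d(a.ravel(), b.ravel(), bins=bins)
    return hist


def occupied_bins(hist):
    """Crude dispersion proxy: how many bins received at least one voxel pair."""
    return int(np.count_nonzero(hist))


a, b = synthetic_pair()
aligned = joint_histogram(a, b)
misaligned = joint_histogram(a, np.roll(b, 5, axis=1))  # shift B by 5 pixels along x

print("occupied bins, aligned:   ", occupied_bins(aligned))
print("occupied bins, misaligned:", occupied_bins(misaligned))
```

Both histograms hold the same number of voxel pairs; misalignment only spreads them over more bins. Displayed as images (a log color scale helps), the aligned histogram should appear as a thin curve and the shifted one as a blurred band.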

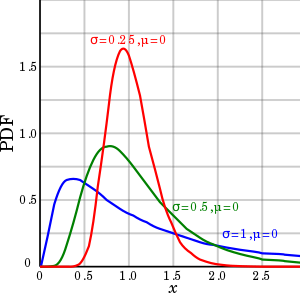

In the 2D histogram, also known as a joint histogram, the distribution of points is qualitatively brightest and tightest when the images are optimally aligned. When the images are out of alignment, the distribution disperses, appearing blurrier and dimmer. A cost function distills this quality into a single number for comparison, and the one found to best describe this phenomenon was the third moment of the histogram. The third moment, which after standardization is known as skewness, measures the “lopsidedness” of a distribution. This concept is difficult to visualize in a 2D histogram and is easier to see in one dimension:

Example of probability distributions with the same mean but different amounts of skew, with the red line having the least. Image credit: Wikipedia

Skewing a distribution not only shifts the curve in one direction but also widens it (disperses, diffuses, or blurs it; take your pick). Because the area under the curve stays the same, this widening necessarily lowers the peak value (intensity).
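For a 1D histogram, the third central moment and its standardized form (skewness, the third central moment divided by the cube of the standard deviation) can be computed directly from bin counts. The sketch below is a minimal NumPy version that treats each bin's count as a weight placed at the bin center, which is an approximation of the underlying data; the toy histograms are made up for illustration.

```python
import numpy as np


def histogram_skewness(counts, centers):
    """Third central moment and skewness of a 1D histogram.

    Bin centers stand in for the sample values, weighted by their counts.
    Returns (third central moment, standardized skewness).
    """
    counts = np.asarray(counts, dtype=float)
    centers = np.asarray(centers, dtype=float)
    p = counts / counts.sum()                  # normalize counts to probabilities
    mean = np.sum(p * centers)
    var = np.sum(p * (centers - mean) ** 2)    # second central moment
    third = np.sum(p * (centers - mean) ** 3)  # third central moment
    return third, third / var ** 1.5           # skewness = mu3 / sigma^3


centers = np.arange(5)
print(histogram_skewness([1, 2, 3, 2, 1], centers))  # symmetric: skewness ~0
print(histogram_skewness([5, 4, 3, 2, 1], centers))  # long right tail: positive
print(histogram_skewness([1, 2, 3, 4, 5], centers))  # long left tail: negative
```

Mirroring a histogram flips the sign of its skewness without changing its magnitude, which is why the last two cases come out as negatives of each other.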

Joint probability and joint entropy

Once it is normalized so that its entries sum to one, the joint histogram can also be interpreted as a joint probability distribution, giving the probability that a particular gray level in one image co-occurs with a particular gray level at the same location in the other image. This information might be useful if, for example, you know image A and want to predict image B, but it doesn’t directly measure how dependent the two images are.
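As a minimal sketch of that interpretation, the NumPy code below normalizes a joint histogram into an estimate of p(a, b), sums out one image to get the marginals p(a) and p(b), and "predicts" a B bin from an A bin using the conditional probability p(b | a) = p(a, b) / p(a). The 2×2 histogram and the helper names are hypothetical, chosen only to keep the arithmetic visible.

```python
import numpy as np


def joint_probability(hist):
    """Normalize a joint histogram so its entries sum to 1: an estimate of p(a, b)."""
    hist = np.asarray(hist, dtype=float)
    return hist / hist.sum()


def marginals(p_ab):
    """Sum out one image: p(a) sums over columns, p(b) sums over rows."""
    return p_ab.sum(axis=1), p_ab.sum(axis=0)


def predict_b_bin(p_ab, a_bin):
    """Most probable B bin given an A bin, via p(b | a) = p(a, b) / p(a)."""
    row = p_ab[a_bin]
    if row.sum() == 0:
        raise ValueError("no voxels fall in this A bin")
    conditional = row / row.sum()
    return int(np.argmax(conditional)), conditional


# Toy 2x2 joint histogram (rows: A bins, columns: B bins).
hist = np.array([[4, 0],
                 [1, 3]])
p_ab = joint_probability(hist)
p_a, p_b = marginals(p_ab)
print(p_a, p_b)  # [0.5 0.5] [0.625 0.375]
print(predict_b_bin(p_ab, 1))
```

Nothing here quantifies dependence: if A and B were independent, every row's conditional distribution would equal p(b), yet `predict_b_bin` would still return an answer.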

In 1995, researchers again keyed in on the observation that misregistration has a diffusion-like effect on the joint histogram, this time connecting it with the idea of information entropy, on the grounds that diffusion of the joint histogram “corresponds to an increase in the information required to describe it” [2]. A diffusion-like process naturally brings to mind thermodynamic entropy from chemistry and physics, and perhaps it is no coincidence that the formula for entropy in statistical thermodynamics (which in turn reproduces the classical thermodynamic definition of entropy in the thermodynamic limit) closely resembles the one for information entropy. It’s nice when visual intuitions lead directly to an intersection of ideas!
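The resemblance is close: Shannon's entropy of a discrete distribution is H = -Σ p log p, while the Gibbs entropy of statistical mechanics is S = -k_B Σ p_i ln p_i, the same form up to the Boltzmann constant and the base of the logarithm. Applied to the normalized joint histogram, Shannon's formula gives the joint entropy H(A, B). A minimal NumPy sketch in bits, with two made-up histograms standing in for a tight (aligned) and a diffused (misaligned) distribution:

```python
import numpy as np


def joint_entropy(hist, base=2.0):
    """Joint entropy H(A, B) = -sum p(a, b) log p(a, b) of a joint histogram."""
    p = np.asarray(hist, dtype=float)
    p = p / p.sum()
    p = p[p > 0]  # empty bins contribute nothing (0 log 0 is taken as 0)
    return float(-np.sum(p * np.log(p)) / np.log(base))


tight = np.eye(4) * 25           # all pairs on a "diagonal": 4 equally likely bins
diffuse = np.full((4, 4), 6.25)  # same total count, spread evenly over 16 bins
print(round(joint_entropy(tight), 6))    # 2.0
print(round(joint_entropy(diffuse), 6))  # 4.0
```

Spreading the same counts over more bins raises the joint entropy, which matches the intuition that a diffused histogram needs more information to describe.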

The mutual information wave

Once the connection to information theory and joint entropy was made, it didn’t take long to make the formal connection to mutual information (MI) [3]. The problem with joint entropy is that it only summarizes the underlying joint probability distribution, not the meaning of the events themselves. MI attempts to improve on joint entropy by comparing it with the joint entropy the images would have if they were statistically independent, effectively subtracting out what would be expected by coincidence. What’s left should be the information that is shared between the images to register. Unlike the Pearson correlation coefficient, which measures the strength of a linear relationship, or the Spearman rank correlation coefficient, which looks for a monotonic one, MI “detects” any statistical dependence, making it suitable for multimodality registration.
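In symbols, MI is I(A; B) = H(A) + H(B) - H(A, B): the joint entropy expected under independence, H(A) + H(B), minus the observed joint entropy. The minimal NumPy sketch below estimates it from a joint histogram and contrasts it with Pearson's r on a hypothetical relationship, B = A², that is neither linear nor monotonic. Pearson's r comes out near zero (and Spearman's would too, since the relationship is not monotonic), while MI is large; shuffling B destroys the pairing and MI drops toward zero. The data, the 32-bin choice, and the function names are assumptions for illustration.

```python
import numpy as np


def entropy(p):
    """Shannon entropy in bits of a probability array (any shape)."""
    p = p[p > 0]
    return float(-np.sum(p * np.log2(p)))


def mutual_information(a, b, bins=32):
    """MI(A; B) = H(A) + H(B) - H(A, B), estimated from a joint histogram."""
    hist, _, _ = np.histogram2d(np.ravel(a), np.ravel(b), bins=bins)
    p_ab = hist / hist.sum()
    p_a, p_b = p_ab.sum(axis=1), p_ab.sum(axis=0)  # marginal distributions
    return entropy(p_a) + entropy(p_b) - entropy(p_ab)


# Hypothetical "intensities": B depends on A, but not linearly or monotonically.
a = np.linspace(-1.0, 1.0, 10001)
b = a**2
b_independent = np.random.default_rng(0).permutation(b)  # same values, pairing destroyed

print("Pearson r:     ", np.corrcoef(a, b)[0, 1])  # close to 0
print("MI, dependent: ", mutual_information(a, b))
print("MI, shuffled:  ", mutual_information(a, b_independent))
```

Histogram-based MI estimates depend on the bin count and carry a small positive bias for finite samples, which is why the shuffled case does not come out exactly zero.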

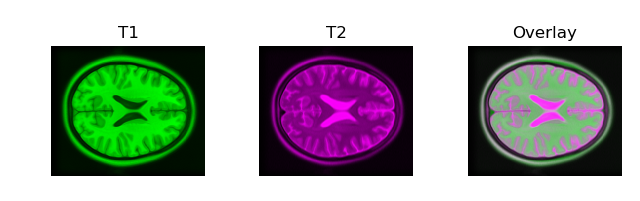

Example images from ICBM 152 Nonlinear atlases version 2009, shown using image fusion-style green/magenta colormaps.

Joint histogram of the above images, using 32×32 bins. This serves as an approximation of the joint probability distribution.

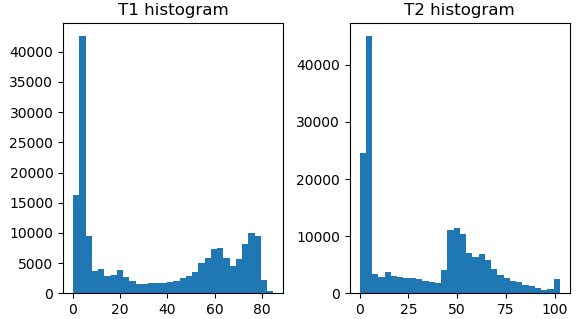

Grayscale histograms of the T1 and T2 images from above. These can be derived from the joint histogram and are essentially approximations of the marginal probability distributions.

The effect of misregistration on the joint histogram and mutual information.
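The same effect can be sketched numerically by sweeping a translation and scoring each offset. The code below is a minimal NumPy illustration, not a registration algorithm: it uses a hypothetical synthetic pair (B is a non-linear remapping of A), shifts B horizontally with `np.roll` (which wraps around the image edge), and computes MI in the equivalent form Σ p(a, b) log[p(a, b) / (p(a) p(b))]. A real registration would hand a score like this to an optimizer searching over transform parameters rather than brute-forcing a handful of shifts.

```python
import numpy as np


def mutual_information(a, b, bins=32):
    """MI in bits from a joint histogram: sum p(a,b) log2[p(a,b) / (p(a) p(b))]."""
    hist, _, _ = np.histogram2d(a.ravel(), b.ravel(), bins=bins)
    p_ab = hist / hist.sum()
    p_a = p_ab.sum(axis=1, keepdims=True)  # column vector of A marginals
    p_b = p_ab.sum(axis=0, keepdims=True)  # row vector of B marginals
    nz = p_ab > 0                          # skip empty bins (0 log 0 = 0)
    return float(np.sum(p_ab[nz] * np.log2(p_ab[nz] / (p_a * p_b)[nz])))


# Hypothetical image pair: B is a non-linear remapping of A (a stand-in for a second modality).
n = 128
y, x = np.mgrid[0:n, 0:n] / n
a = np.sin(2 * np.pi * 3 * x) * np.cos(2 * np.pi * 2 * y)
b = 1.0 - a**2

# Translate B horizontally by -8..8 pixels and score each offset.
shifts = np.arange(-8, 9)
scores = [mutual_information(a, np.roll(b, s, axis=1)) for s in shifts]
for s, mi in zip(shifts.tolist(), scores):
    print(f"shift {s:+d} px: MI = {mi:.3f} bits")
print("best shift:", shifts[int(np.argmax(scores))])
```

Because B is a deterministic function of A only at zero shift, MI peaks there and falls off as the images slide out of alignment.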

So far we’ve only considered rigid registration, since it is computationally simpler and since, up to this point in the mid-1990s, papers on medical image registration had focused on images of the brain, which, being encased in bone, doesn’t (or at least hopefully shouldn’t) deform much. As computational power increased, however, MI was being applied to deformable image registration by 2001 [4], using sampled patches of both images to generate the joint histogram. For all the success MI brought, it lacked a clear, deterministic (non-probabilistic) mathematical explanation until 2014 [5].

As the medical image registration community was getting acquainted with MI in 1995, another contender was waiting in the wings, while the seeds for Autofuse awaited the next century…

References

1. Hill, D. L., Studholme, C. & Hawkes, D. J. Voxel similarity measures for automated image registration. in (ed. Robb, R. A.) 205–216 (1994).

2. Collignon, A., Vandermeulen, D., Suetens, P. & Marchal, G. 3D multi-modality medical image registration using feature space clustering. in Computer Vision, Virtual Reality and Robotics in Medicine (ed. Ayache, N.) 905, 193–204 (Springer-Verlag, 1995).

3. Collignon, A. et al. Automated Multi-Modality Image Registration Based on Information Theory. Lect Notes Comput Sci 3, (1995).

4. Mattes, D., Haynor, D. R., Vesselle, H., Lewellen, T. K. & Eubank, W. Nonrigid multimodality image registration. in (eds. Sonka, M. & Hanson, K. M.) 1609–1620 (2001).

5. Tagare, H. & Rao, M. Why Does Mutual-Information Work for Image Registration? A Deterministic Explanation. IEEE Transactions on Pattern Analysis and Machine Intelligence 1–1 (2014). doi:10.1109/TPAMI.2014.2361512