Kris T. Huang, MD, PhD, CTO

Artificial intelligence, deep learning, machine learning: these terms are popping up everywhere. When we hear them, we think of massive computers, convolutional neural nets, algorithms, and lifetimes of training data. And yet my daughter, at 4 years old and with zero radiology training, was able to register a pair of 3-dimensional CT scans in seconds, when specialized, purpose-built, medical-grade software had difficulty. Machine learning systems are loosely modeled on the brain, but clearly there is something missing.

That something is perception. My daughter can perceive the images simultaneously as wholes and in parts, which lets her efficiently search the entirety of both for correspondences. The visual perception pathway in humans is so successful evolutionarily that some variant of it is shared by all vertebrates, from other mammals all the way to reptiles and fish. Despite this, we’ve largely ignored the perceptual part of intelligence in the rush for AI and machine learning. In our eagerness to be at the forefront of these developments and to apply them to anything and everything, we might very well be overlooking other, and perhaps better, approaches to the problem at hand. Over the next few issues we’ll take a closer look at the disconnect between current approaches to machine learning, the way humans learn, and the real world.

The power of machine learning is undeniable. However, perhaps we should develop machine perceptual processes to keep our machine models anchored to reality.

But first, what is machine perception?

Machine perception is the ability of a machine to take sensory inputs and interpret the data in a human-like way. Basic visual tasks, such as registration, map directly to the visual cortex in the brain, as shown by decades of neuroscience research. It turns out that the visual cortex is exquisitely hardwired for motion tracking and object recognition, and that many of these functions do not require extensive training.

Can your neural network do this… with a training set of one? Image from: Lowe, DG. IJCV 60, 91–110 (2004).

3D image registration and machine perception

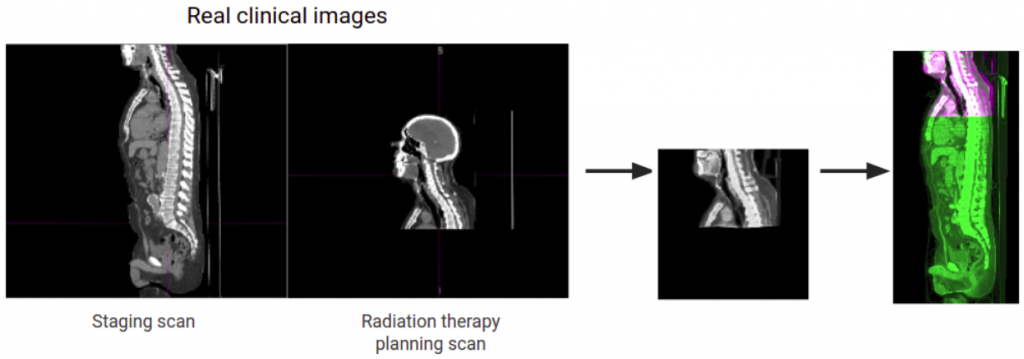

3D deformable image registration (DIR), which aligns 3D scans in a flexible rather than rigid fashion, is the basis for tracking body motion, disease progression, response to treatment, and combining information from multiple scans. However, it is a painstaking and time-consuming task to perform manually. Automating DIR can greatly simplify radiology and radiation oncology workflows, increasing consistency, efficiency, and productivity.
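To make the difference between rigid and flexible alignment concrete, here is a minimal sketch in Python. It is not how Autofuse or any particular product works, and it does not perform registration (which means finding the transform). It only applies two kinds of transform to a few 3D points. The function names and the toy displacement field are hypothetical, chosen purely for illustration.

```python
import math

def rigid_transform(points, angle_rad, translation):
    """Apply ONE rotation (about the z-axis) and ONE translation to every point."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    tx, ty, tz = translation
    return [(c * x - s * y + tx, s * x + c * y + ty, z + tz)
            for x, y, z in points]

def deformable_transform(points, displacement):
    """Move each point by its own displacement vector, looked up from its position."""
    warped = []
    for x, y, z in points:
        dx, dy, dz = displacement((x, y, z))
        warped.append((x + dx, y + dy, z + dz))
    return warped

def toy_displacement(point):
    # Hypothetical field (an assumption for this sketch): stretch along z,
    # with points at larger z moving farther.
    _, _, z = point
    return (0.0, 0.0, 0.1 * z)

points = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 0.0, 10.0)]

rigid = rigid_transform(points, math.radians(30), (5.0, -2.0, 1.0))
warped = deformable_transform(points, toy_displacement)

# Distance between the first and third points before and after each transform.
d_original = math.dist(points[0], points[2])
d_rigid = math.dist(rigid[0], rigid[2])
d_warped = math.dist(warped[0], warped[2])

print(d_original, d_rigid, d_warped)
```

A rigid transform keeps every distance between points unchanged, so it can only account for a body that has moved as a single solid block. The deformable transform changes those distances, which is what is needed when anatomy stretches, compresses, or shifts between scans.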

Machine perception allows software to emulate the robustness and accuracy of human vision, but with the speed, precision, and tirelessness of a computer. The machine perception technology in Pymedix Autofuse enables unparalleled robustness and accuracy with unprecedented reliability.

Can your image registration do this… with a training set of one… with 99.9999% probability?

The great part about all this is that we haven’t even gotten to the machine learning part yet. With sights set on a future of adaptive planning, radiology and radiation oncology departments need 3D DIR to be fast, precise, accurate, and reliable. Autofuse image registration offers the speed demanded by radiologists as well as the precision and accuracy required by oncologists – the best of both worlds.
