Kris T. Huang, MD, PhD, CTO

Deep learning is a tool. Machine perception is a potential result of using it: the ability of a machine to interpret data in a manner similar to humans. Being (very) loosely patterned after biological systems, deep neural networks (DNNs) can accomplish certain tasks, like image classification or playing Go, with apparent human-like and at times even superhuman skill. With performance like that, it is easy to believe (i.e., extrapolate) that their behavior is human-like, or perhaps in some way better.

[Image credit: DeepMind]


While the low-level details of deep learning are known quantities (it is software, after all), recent research aimed at a higher-level understanding of how it solves problems reveals a number of fundamental differences between how humans and deep learning systems approach a problem. Given the introduction of these systems into safety-critical industries like medicine, it is important to understand the quirks, limitations, and vulnerabilities of deep learning. It is interesting that we accept these behaviors in the systems we use, even though the same behaviors in a human colleague would raise serious concerns, even alarm.

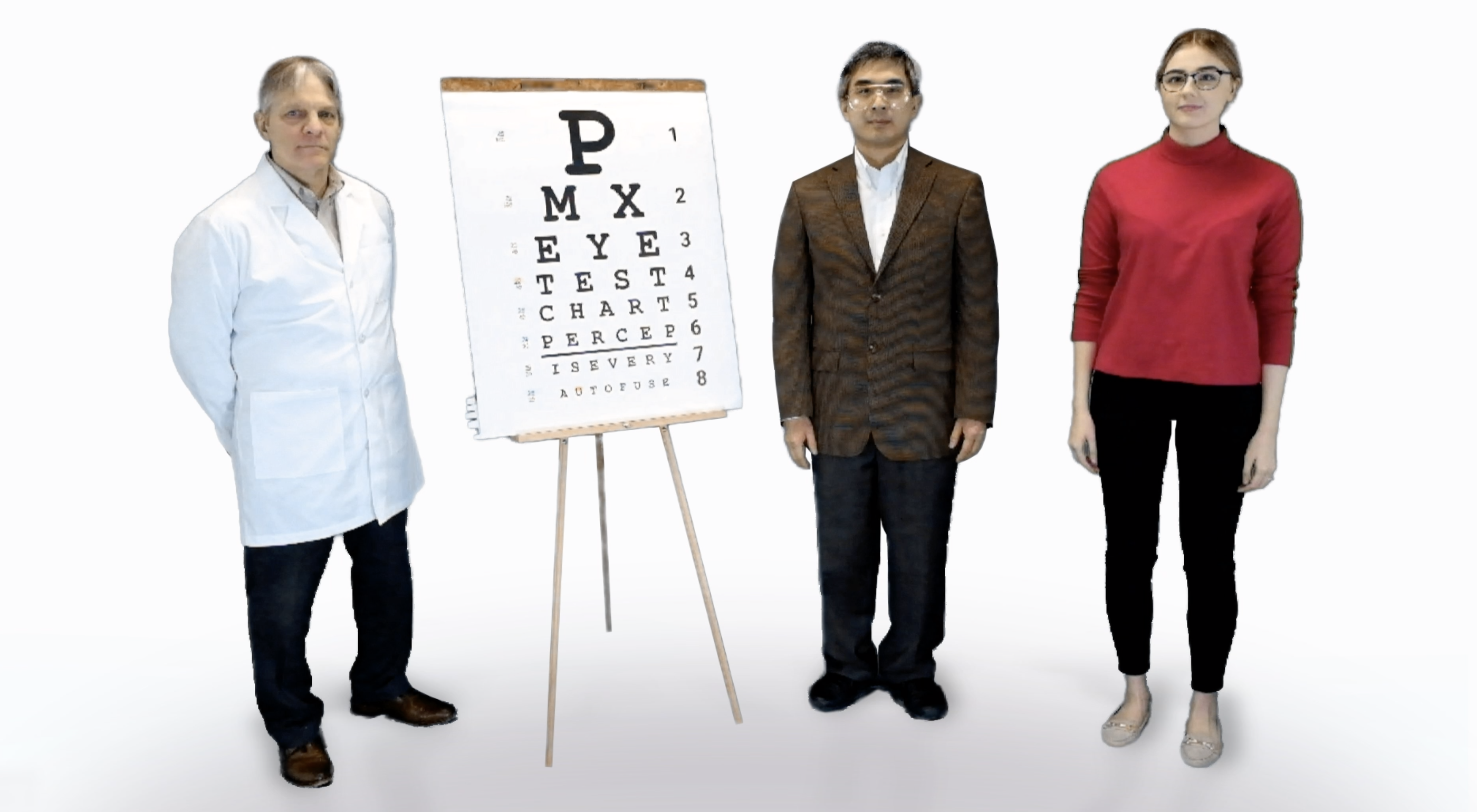

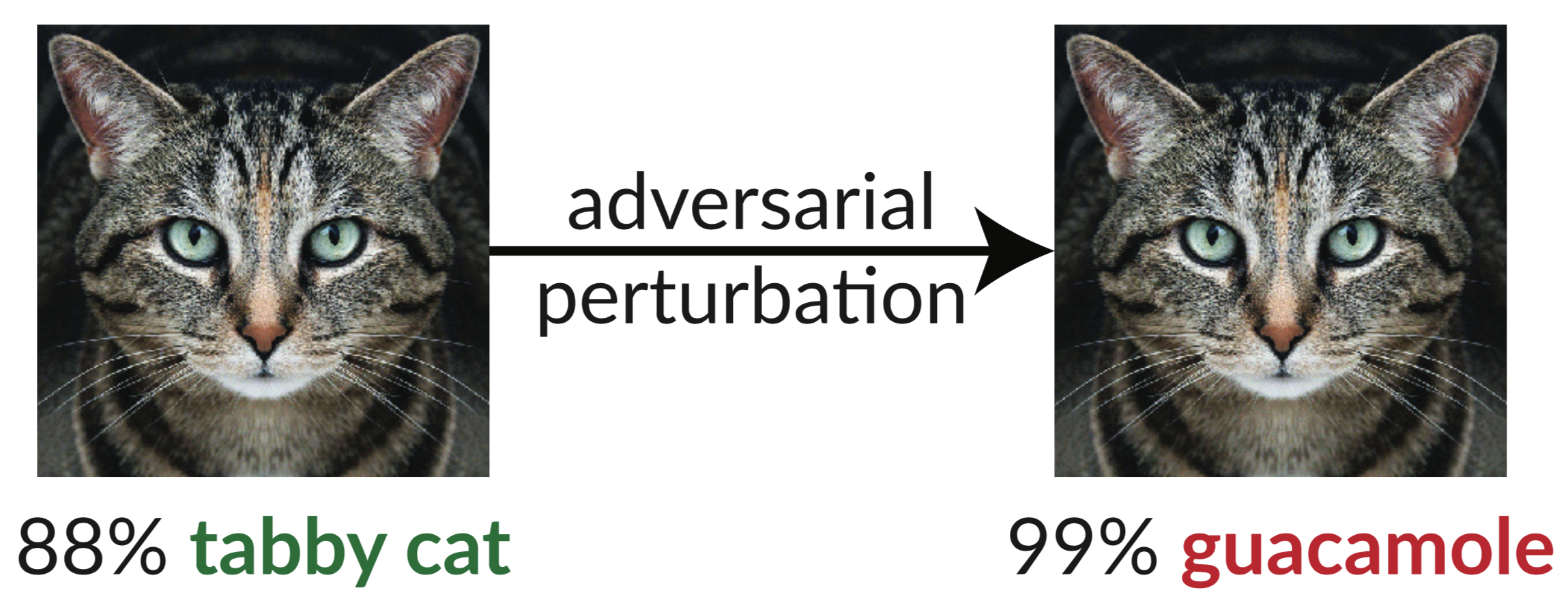

In “The Eye Test,” I personify a hypothetical deep learning image classification system participating in a recognition test, based on research work presented at ICML 2018 [1]. The first image, a tabby cat, is easy enough. On the second image, though, there’s something amiss. Deep Learning has detected a major change in the image, while the human test administrator still sees a cat, as does Machine Perception. Suspecting some trickery on the part of the tester, I nevertheless declare with confidence that I see guacamole. By the third image, I begin to have less confidence in my answer, but whatever it is I think I’m seeing, it isn’t a cat.

[Source: (1)]

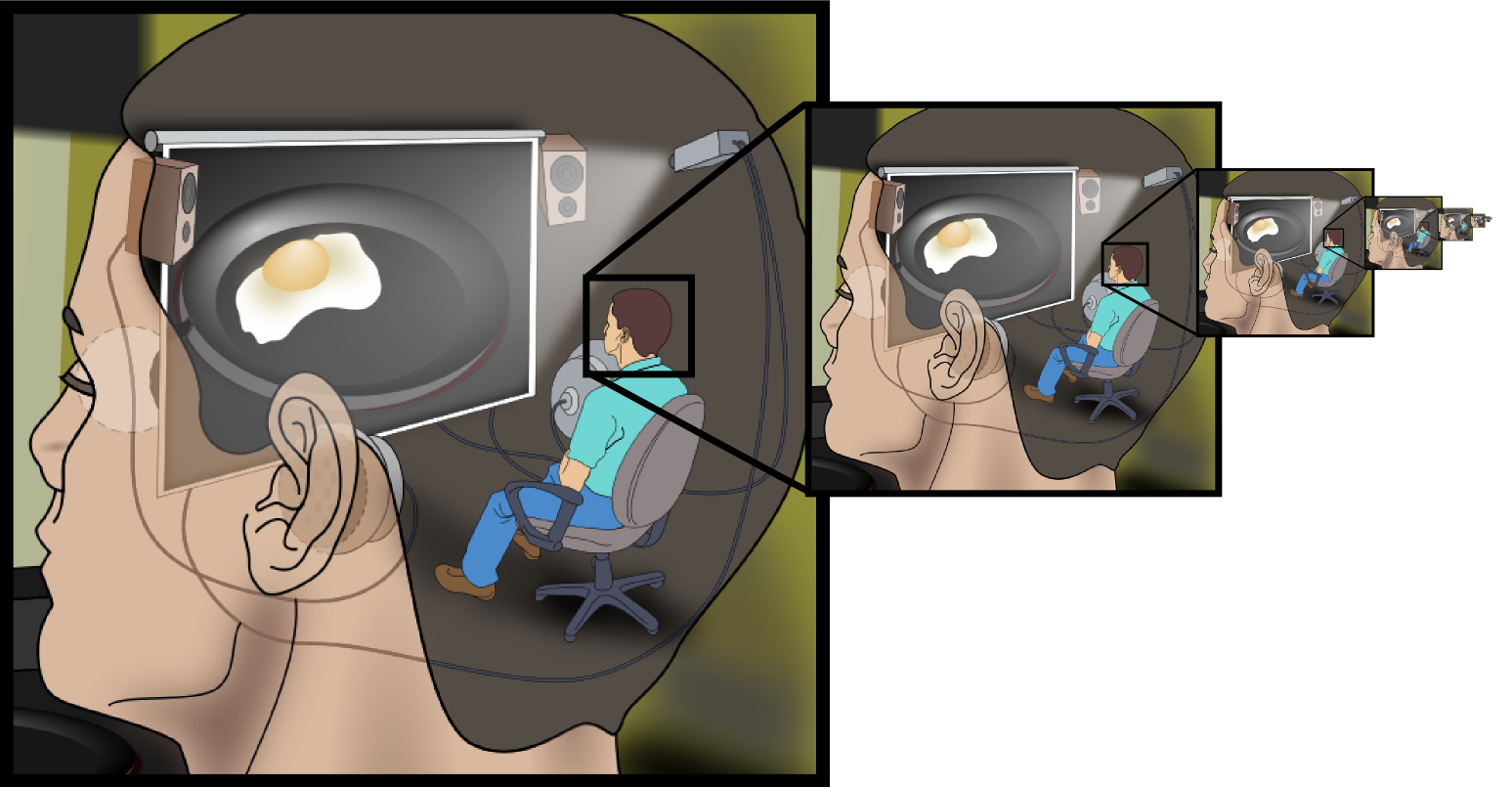

This touches on a related problem: a DNN can report how confident it is in its answer, but it doesn’t yet explain how or why. Work has been done on this front, typically using a second neural network to interpret the activations and results of the first. Using another neural network to generate explanations for a DNN sounds a bit circular, but considering that DNNs often have many more parameters than the inputs they classify have values (Inception v3, for example, has about 25 million parameters and takes inputs of about 0.27 million values, i.e., 299 × 299 pixels × 3 color channels [2]), it’s probably what we’re stuck with for the time being. Until we find a better way, it’s DNNs all the way down.
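The size comparison in the paragraph above comes down to simple arithmetic. Here is a rough sketch, assuming Inception v3's commonly used 299 × 299 RGB input (an assumption on my part; the text quotes only the 0.27 figure) and taking the approximate 25 million parameter count from the text as given:

```python
# Assumed input shape for Inception v3: 299 x 299 pixels, 3 color channels.
height, width, channels = 299, 299, 3
input_values = height * width * channels

# Approximate parameter count quoted in the text (not measured here).
approx_params = 25_000_000

# How many parameters the network has per input value.
ratio = approx_params / input_values

print(f"input values per image: {input_values}")
print(f"parameters per input value: {ratio:.1f}")
```

Under these assumptions the network has on the order of a hundred parameters for every number it is shown, which gives a sense of why reading meaning directly off the weights is hard.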

[By Original work: Jennifer Garcia (User:Reverie)Derivative work: User:Pbroks13Derivative work of derivative work: User:Was a bee – File:Cartesian_Theater.svg, CC BY-SA 2.5, https://commons.wikimedia.org/w/index.php?curid=20397601]

Even at our fairly early stage in understanding deep learning, it is becoming clear that DNNs are probably not “learning” what we think they should when left to their own devices [3]. Because of the real or manufactured association between human and machine learning, terms like “supervised learning” often take on conflated meanings outside of their more specialized fields. If we consider “supervised learning” to mean “learning from labeled data,” as it is used in machine learning, it is easier to realize that we are essentially providing DNNs answers without explanations or insight, and we should not be surprised when we find that the machine does not, in fact, exhibit insight about the contents of the data.

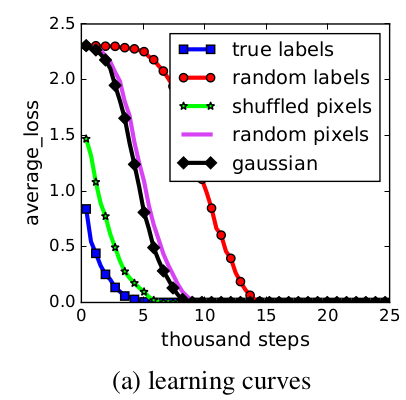

[Source: (4)]

[Source: i.pinimg.com]

Even the term “generalization,” another victim of conflation with the lay definition, requires rethinking, according to AI researchers from MIT and Google [4]. They found that state-of-the-art DNNs will happily train on and fit randomly labeled data and even completely unstructured random noise. In other words, a DNN will “learn” something, whether you like it or not, and whether or not there is actually anything there. This explains, at least partially, why DNNs can report something very wrong with high confidence.
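The effect is easy to reproduce in miniature. The sketch below is not the experiment from [4] and does not use a DNN. It is a stand-in that trains a plain perceptron with more weights than training examples on Gaussian noise with coin-flip labels. All names and sizes (`train_perceptron`, 20 examples, 100 features) are hypothetical choices for illustration.

```python
import random


def score(w, x):
    """Dot product of the weight vector and one input."""
    return sum(wi * xi for wi, xi in zip(w, x))


def train_perceptron(xs, ys, max_epochs=1000):
    """Classic perceptron rule; stops once every training point is correct."""
    w = [0.0] * len(xs[0])
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in zip(xs, ys):
            if y * score(w, x) <= 0:  # misclassified: nudge w toward y * x
                w = [wi + y * xi for wi, xi in zip(w, x)]
                mistakes += 1
        if mistakes == 0:
            break
    return w


def accuracy(w, xs, ys):
    """Fraction of points whose predicted sign matches the label."""
    return sum(1 for x, y in zip(xs, ys) if y * score(w, x) > 0) / len(xs)


def random_dataset(rng, n, d):
    """Pure noise: Gaussian inputs and labels chosen independently by coin flip."""
    xs = [[rng.gauss(0, 1) for _ in range(d)] for _ in range(n)]
    ys = [rng.choice([-1, 1]) for _ in range(n)]
    return xs, ys


rng = random.Random(0)
n_train, n_features = 20, 100  # more weights than training examples
train_x, train_y = random_dataset(rng, n_train, n_features)
test_x, test_y = random_dataset(rng, 200, n_features)

w = train_perceptron(train_x, train_y)
train_acc = accuracy(w, train_x, train_y)
test_acc = accuracy(w, test_x, test_y)
print(f"train accuracy: {train_acc:.2f}")
print(f"test accuracy:  {test_acc:.2f}")
```

In this setup the model should fit the meaningless training labels perfectly, while accuracy on fresh noise stays around chance. The model has "learned" something even though there was nothing there to learn.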

If deep learning were human, I’d definitely recommend a thorough eye test.

References

1. Athalye, A., Carlini, N. & Wagner, D. Obfuscated Gradients Give a False Sense of Security: Circumventing Defenses to Adversarial Examples. arXiv:1802.00420 [cs] (2018).

2. Models and examples built with TensorFlow. https://github.com/tensorflow/models (tensorflow, 2019).

3. Brendel, W. Neural Networks seem to follow a puzzlingly simple strategy to classify images. Bethgelab (2019).

4. Zhang, C., Bengio, S., Hardt, M., Recht, B. & Vinyals, O. Understanding deep learning requires rethinking generalization. arXiv:1611.03530 [cs] (2016).